This problem came up in a current project. Initially the problem appears quite straight forward but it’s actually quite tricky. Let’s start by explaining what I mean by transient links in hierarchical data.

In the original project, I was working with a hierarchy of 5 levels but I think we can simplify that a little and work with just 3. For argument’s sake lets use ‘Document, ‘Section’ and ‘Paragraph’ as the three levels. Although blatantly contrived, this structure could possibly be used in a system managing changes to documents.

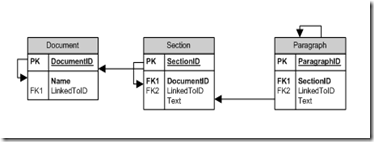

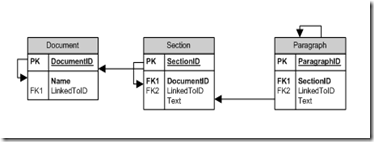

Figure 1

Figure 1 shows a very simple data structure for this situation. Documents are made up of Sections which are made up of Paragraphs. In this system any of our three entities can be copied by creating a new record with the LInkedToID field referencing the Primary Key for the original record. If a Section record is copied, then new Paragraph records are also generated which are copies of the original Section’s Paragraphs. Similarly, if a Document record is copied then its Sections and Paragraphs are also copied.

The first sign of this getting tricky is encountered when we add in the next requirement: when a Section record is copied, the new Paragraph records shouldn’t all link back to where they were copied from – the new records should generate a copy of the same structure of copied Paragraphs which were in the original Section. The root record for the new structure of Paragraphs should reference the original root Paragraph record. Figure 2 contains some XML formatted data showing how the Paragraph records of a parent Section and it’s copy would relate to each other.

Figure 2.

<Paragraph>

<ParagraphID>1</ParagraphID>

<SectionID>1</SectionID>

<LinkedToID>null</LinkedToID>

<Text>This is the text for paragraph 1</Text>

</Paragraph>

<Paragraph>

<ParagraphID>2</ParagraphID>

<SectionID>1</SectionID>

<LinkedToID>1</LinkedToID>

<Text>This is text for paragraph 1 which has been re-versioned.</Text>

</Paragraph>

<Paragraph>

<ParagraphID>3</ParagraphID>

<SectionID>1</SectionID>

<LinkedToID>2</LinkedToID>

<Text>This is the text for paragraph 1 which has been re-versioned a second time.</Text>

</Paragraph>

<Paragraph>

<ParagraphID>4</ParagraphID>

<SectionID>2</SectionID>

<LinkedToID>1</LInkedToID>

<Text>This is the text for paragraph 1 in the copied section.</Text>

</Paragraph>

<Paragraph>

<ParagraphID>5</ParagraphID>

<SectionID>2</SectionID>

<LinkedToID>4</LinkedToID>

<Text>This is the first revision of the paragraph in the copied section.</Text>

</Paragraph>

<Paragraph>

<ParagraphID>6</ParagraphID>

<SectionID>2</SectionID>

<LinkedToID>5</LinkedToID>

<Text>This is the second revision of the paragraph in the copied section.</Text>

</Paragraph>

In the Figure 2 scenario, Section 1 is made up of a single Paragraph which has been revised twice. Section 2 is a revision of Section 1, so the root Paragraph references the root Paragraph of Section 1 while the other Paragraphs of Section 2 link back to Section 2’s root Paragraph.

The requirement is for this pattern to apply for all levels. So if a Document is copied then the Sections will be copied maintaining the references. Likewise, each Section copied to the new Document would also have their Paragraphs generated with the same linked structure of their parent Paragraphs in the original Document.

Now we have some understanding of what I mean by transient links in hierarchical data. Copying data in this way might seem quite simple. In fact initially I thought it was a no brainer and wrote a method similar to the one in Figure 3.

Figure 3.

public void CopyDocument(Document doc)

{

var newDoc = new Document

{

Name = String.Concat(“Copy of “, doc.Name),

LinkedToID = doc.DocumentID

};

dataContext.Document.InsertOnSubmit(newDoc);

dataContext.SubmitChanges();

var sections = dataContext.Section.Where(s=>s.DocumentID==doc.DocumentID);

foreach(var section in sections)

{

var newSection = new Section

{

DocumentID = newDoc.DocumentID,

LinkedToID = section.SectionID,

Text = section.Text

} ;

dataContext.Section.InsertOnSubmit(newSection);

dataContext.SubmitChanges();

var paragraphs = dataContext.Paragraph.Where(p=>p.SectionID==section.SectionID);

foreach(var paragraph in paragraphs)

{

var newParagraph = new Paragraph

{

SectionID = newSection.SectionID,

LinkedToID = paragraph.ParagraphID,

Text = paragraph.Text

};

dataContext.Paragraph.InsertOnSubmit(newParagraph);

dataContext.SubmitChanges();

}

}

}

At first glance this might look like it could do the job but in fact this would link each new record back to the record it was copied from rather than maintaining the original structure.

So how is it done?

The example in Figure 2 is pretty simple. For that situation, the LinkedToID could be followed back to the root Paragraph record and then the children created one at a time pointing to the new root record and the first child respectively. Unfortunately this is a hugely oversimplified example. In actuality the root record may have already been copied into several other Section records already, and have other children which are linked via the LinkedToID but shouldn’t be copied in this process. What’s more, we may not always be copying a root node!

To accomplish the task, we need to introduce two new fields into each entity: ProcessID and CopiedFromID (I would normally use ProcessGuid instead of ProcessID but typing out a guid in a blog is tedious so an integer field will suffice here). Figure 4 contains C# code to accomplish this.

Figure 4.

public void CopyDocument(Document doc)

{

// GetNewProcessID() is a method which returns an

// integer which hasn’t been used as a ProcessID yet.

var processID = GetNewProcessID();

var thisDoc = document;

CopyDocumentMaintainingLinks(thisDoc, processID);

var originalSections = dataContext.Section.Where(s=>

s.DocumentID==doc.DocumentID);

foreach(var section in originalSections)

{

var thisSection = section;

CopySectionMaintainingLinks(thisSection,

thisSection.SectionID, processID);

var originalParagraphs = dataContext.Paragraph

.Where(p=>p.SectionID==thisSection.SectionID);

foreach(var paragraph in originalParagraphs)

{

var thisParagraph = paragraph;

CopyParagraphMaintainingLinks(thisParagraph,

thisParagraph.ParagraphID, processID);

}

}

}

public void CopyDocumentMaintainingLinks(Document doc,

int linkedToID, int processID)

{

var newDoc = new Document

{

Name = String.Concat(“Copy of “, doc.Name),

LinkedToID = linkedToID,

CopiedFromID = doc.DocumentID,

ProcessID = processID

};

dataContext.Document.InsertOnSubmit(newDoc);

dataContext.SubmitChanges();

var linkedDocuments = dataContext.Document

.Where(d=>d.LinkedToID == doc.DocumentID);

foreach(var document in linkedDocuments)

{

var thisDoc = document;

CopyDocumentMaintainingLinks(thisDoc,

newDoc.DocumentID, processID);

}

}

public void CopySectionMaintainingLinks(Section section,

int linkedToID, int processID)

{

var newParentDocument = dataContext.Document

.SingleOrDefault(d=>

d.CopiedFromID == section.DocumentID &&

d.ProcessID == processID);

var newSection = new Section

{

LinkedToID = linkedToID,

CopiedFromID = section.SectionID,

Text = section.Text,

DocumentID = newParentDocument.DocumentID,

ProcessID = processID

};

dataContext.Section.InsertOnSubmit(newSection);

dataContext.SubmitChanges();

var linkedSections = dataContext.Section.Where(s=>

s.LinkedToID==section.SectionID

&& s.DocumentID==section.DocumentID);

foreach(var section in linkedSections)

{

var thisSection = section;

CopySectionMaintainingLinks(thisSection,

newSection.SectionID, processID);

}

}

public void CopyParagraphMaintainingLinks(

Paragraph paragraph, int linkedToID, int processID)

{

var newParentSection = dataContext.Section

.SingleOrDefault(s=>

s.CopiedFromID == paragraph.SectionID &&

s.ProcessID == processID);

var newParagraph = new Paragraph

{

SectionID = newParentSection.SectionID,

LinkedToID = linkedToID,

Text = paragraph.Text,

CopiedFromID = paragraph.ParagraphID,

ProcessID = processID

};

dataContext.Paragraph.InsertOnSubmit(newParagraph);

dataContext.SubmitChanges();

var linkedParagraphs = dataContext.Paragraph

.Where(p=>p.LinkedToID == paragraph.ParagraphID &&

p.SectionID == paragraph.SectionID);

foreach(var para in linkedParagraphs)

{

var thisParagraph = para;

CopyParagraphMaintainingLinks(thisParagraph,

newParagraph.ParagraphID, processID);

}

}

The method CopyDocument is pretty straight forward, the fun bits are in the other three methods. Because the target parent object isn’t necessarily known, we have to find it using the CopiedFromID and the ProcessID fields we added earlier. In this way there are two types of transient relationships defined: linked records and copied records. Of course, a record might be copied dozens of times which means the ProcessID field is needed to restrict the scope of the query to just this copy process.

Another possible pit fall is when selecting the linked items. Because of the way things are copied, we’re only interesting in copying Sections which are in the source Document and for each individual Section we don’t need to copy linked Paragraphs from outside that Section. So we can constrain the query as so.

It’s worth noting that this technique will continue to work no matter how many levels of data exist. Originally I was using 5 levels but there are certainly applications for this technique using more than this. It’s also worth noting that although I’ve written these methods out ‘long hand’ if you like, it would be possible to write a single method to handle all data levels as long as the classes implemented an interface defining the ID, LinkedToID, CopiedFromID and ProcessID fields. This slightly more complex recursive method would need to use reflection to determine what level of data was being copied in any given iteration in order to create the new record.

That’s pretty much all I have to say on this subject. I thought it was an interesting problem which I couldn’t find a solution for elsewhere on the web (although I’m sure they’re out there).

NB: I didn’t copy the code directly from my project so don’t expect it to work perfectly after a copy and paste.